Caroline Sinders: the power of art and the need for change

The fight for human rights has been battering the nations’ parliaments for decades, and one of the forms this fight can take is creativity.

by Eleonora Raspi

There are artists whose practice originates and develops in solitary private spaces, who craft the imagery of their own vision on reality. And then there are artists like Caroline Sinders: a machine learning designer, an activist in the field of digital rights, confident in her solid background in photography. In her work, the individual-and-secluded and the collaborative-and-relational coexist and feed off each other in surprising ways, always with human relations and their social ramifications as her theoretical and artistic point of departure. For the past few years, this artist and critical designer has been forging her identity across the fjord-like edges of western-based contemporary artists, tackling the ultimate role of art in our current society, focusing particularly on digital community health and harassment, AI inequity, and discrimination and bias. Here, she gives us her take on art and methodology, as well as the urge for a better and safer design policy, intersectional perspectives in dealing with data, and the overall need for real change in the world of digital conversations. She currently works for the UAL Creative Computing Institute. Sinders has worked with international institutions like the United Nations, Amnesty International, IBM Watson, and the Wikimedia Foundation, and has presented her work at Tate Modern, MoMA PS1, and the Victoria and Albert Museum, among others.

ER: How do you feel your background in visual arts and photography informs your current practice?

CS: Photography and video are among the very few mediums that can entice people to believe because their nature is about pure visual mimicry. There’s a kind of rich, guttural space in photography between fact and fiction: that’s where my practice lies. During my time at NYU’s Tisch School of the Arts, I became deeply interested in the tension between truthfulness and falsity, and the idea of creating something that feels real but is actually fabricated. The main thing we were taught in photojournalism classes is that where you place the camera and where those images go have radical impacts on the world. Everything you’re choosing to do becomes a political choice.

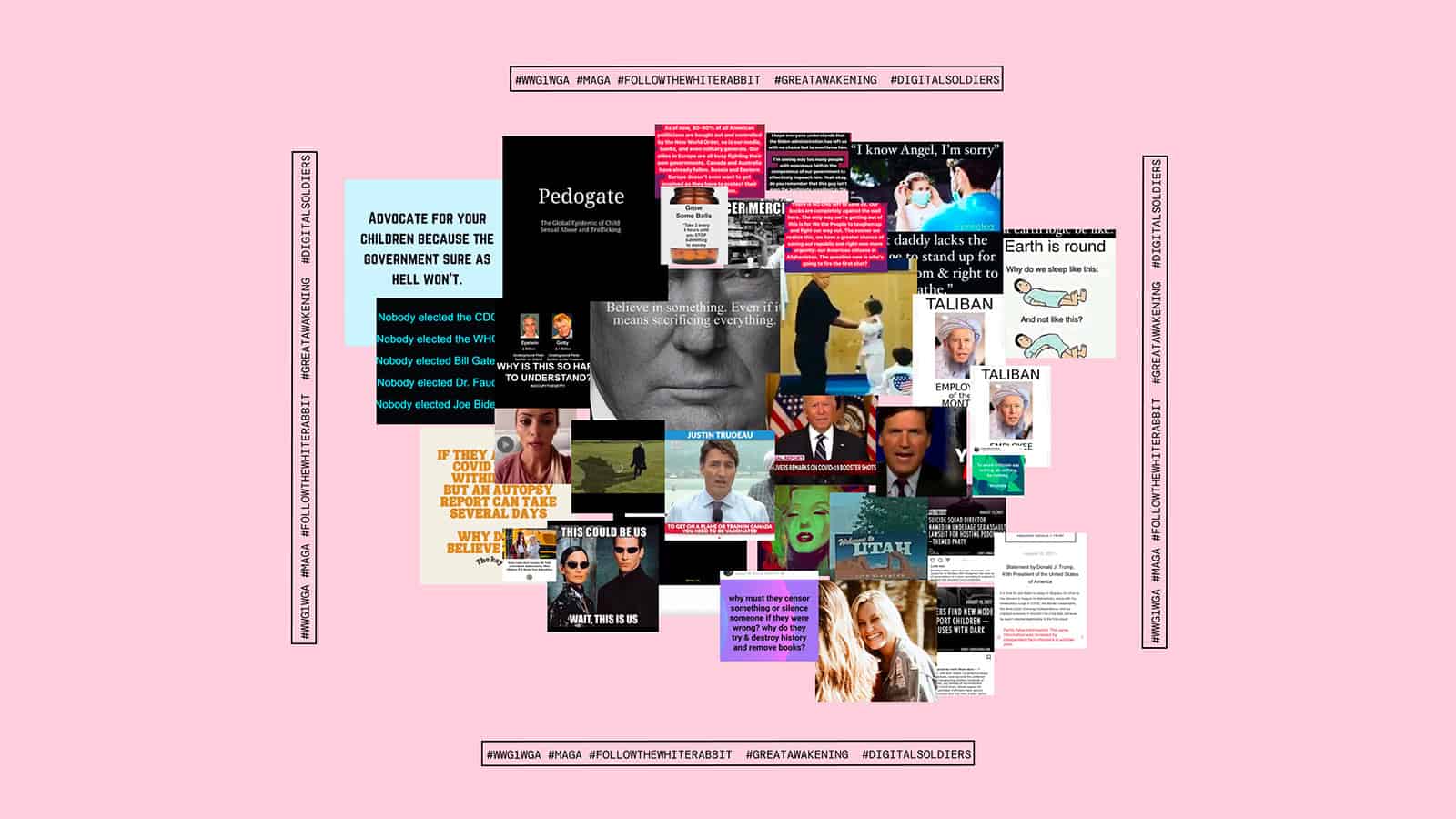

Technology-based art allows you to really play with the duality of real and fake, and then look at the impossibilities of what we think facts are. If you look at my recent work with KW, A Certain Kind of Doom Scroll, I’m collecting many branching conspiracy theories under one particular conspiracy theory (the #savethechildren QAnon conspiracy), choosing Instagram as the ideal means. Social media, in general, is ultimately the downstream manifestation of my take on fact and fiction.

Furthermore, as in Within the Terms and Conditions, or the web VR game Dark Patterns, I’m not at all interested in pure documentation. I can use photogrammetry and gather collage images related to facts, truth, and data, but present them as something much more aesthetically complex. I want to disrupt the flux of facts and uncover them through a series of specific aesthetic choices that the public could give credibility to. Art is a form of truth, sure, but it is representational. It may be a form of understanding that can lend understanding, but there’s a difference between documentation and firsthand experiences with journalism recontextualized in another space. I play with such representation, aware that even if I am documenting reality, all those downstream elements of representation are the ones that really matter.

ER: Let’s dig a bit deeper. Tell us more about your methodology and your idea of what research-driven art is / can / should be.

CS: I used this definition for the first time in 2018, but it has evolved since then. Although all art is somewhat based on research, my art practice is directly shaped by research, and it is fully informed by it. My research-driven art is “human rights (mainly Internet freedom and digital rights) in art,” which is a step further than transition design practice. It doesn’t have to be as investigatory as Forensic Architecture, but it can be something close to artist Heather Dewey-Hagborg’s projects or as poetic as some of Dread Scott’s works. What I always want to stress with people is that art is just one of the hats that I wear. I hope I never have to choose between doing human rights research and doing art because they both really deeply feed my practice.

My methodology follows three steps: research, then activism or advocacy, and, last, art or aesthetics. I formulate my own rationale on a topic, pointing out why I’m engaging in it and what I believe needs to be changed. This long-term research period can eventually manifest into a workshop, a class series, or a civil society style paper: something tangible that rapidly moves into activism and advocacy, like partnering with groups like the Mozilla Foundation, running campaigns, engaging with the European Parliament or the European Commission, or having conversations with the FTC. The last step for me is aesthetics: I aim at creating new imagery or poetic analogies that people can understand. And art, to me, is the best instrument to achieve this goal. Ultimately, if you’re engaging in any kind of activist work, spanning subjects like white supremacy, genocide, or conspiracy theories, I think it’s extraordinarily important that you do not leave anything up to ambiguity; otherwise, you may open yourself up to actually supporting the work you’re trying to comment on or adding a lot more noise to an already noisy conversation.

ER: Whether we want it or not, all speech, thought, and behavior take place with/through/because of others. What are the key elements of a successful collaborative method in your work?

CS: There are practical and emotional advantages to collaboration. The reason I like collaboration is that, because I’m working in a very cross-disciplinary field, it sometimes just makes it easier to speed through things or have someone else providing feedback on what you’re creating. At times it goes much deeper: with bigger collaborations like the one with Hyphen Labs for the Tate work Higher Resolutions, I was able to bring AI into data privacy and transform theoretical ideas into a physical installation. And I don’t think this project could have worked without both of us together.

Above all, I think we learn more when we collaborate with other people. In the act of sharing ideas with someone, at a certain point, you gain momentum, and, in a matter of seconds, the idea goes from being tiny to filling up the entire room. Look at what happened with artist/researcher Anna Ridler: together, we came up with a complex, machine-learning-generated moving image piece called Cypress Trees, thanks to a few conversations on climate change, deforestation, and political allegories related to trees.

ER: Tackling the core of your work, what relation do you see between design, technology and identity?

CS: I think digital spaces, in general, can offer the ability to augment aspects of our personality, as in the case of game avatars, where people may change their gender at will. Also, in the time of web 3.0 there has been a big, interesting shift from “us being in the anonymous space” to “us using real names.” Why? At least in my case, as a professional working in the field of technology, it is vital to have something tied to your real name for putting yourself out there, known and trustworthy, in a sense. With people’s personal and professional lives more and more intertwined, our LinkedIn, Twitter, and Instagram accounts have become a big facet of our own identity. But, for this very reason, I also think we now need anonymous spaces and rules that allow for that anonymity. Think about the online harassment world and the online activism work: a de-anonymized internet is not necessarily safer, and there are reasons you want to maintain a certain degree of anonymity.

ER: In an article for the Barbican Centre you compared online space with physical cities…

CS: In writing that article, I was dealing with the idea that certain aspects of global web policy cannot apply to the entire world. Even though Facebook, for instance, is using a form of global policy to inform us about its decisions, it is still based in the United States and on a US version of what is free speech versus not. If you look at Europe, there are many post-World War laws that do not allow Nazi iconography to be shown, but that does not apply to the US. Facebook and other big companies are trying to create pluralistic global spaces, but regulatory blocs and countries have different kinds of rules and values. Many of us try to fit into policy systems that are, simply, not designed for the nuance of five hundred million people. From the standpoint of a healthy, intersectional, privacy-focused Internet freedom, there are too many things going on or to respond to, and I feel we’re getting the short end of the stick: policies that try to please everyone but instead please no one. When it comes to adjudicating and looking at harm, as in harassment reporting and content matters, those systems are not scaled up to be safe.

ER: Talking about a useful design policy, what do you think communities need?

CS: It depends on the context, I guess. I’m one of the few people in the digital rights space who have a design background and focus on how technology is designed and how that impacts users from an online harm standpoint, usually online gender-based violence. Think about all that can be frustrating in creating a harassment report. But I think being a facilitator, co-designing with people, testing with them, and asking them the things that they want is really helpful because they’re the ones filling out the report and going through the process. It allows you to connect with a community of people, move at their speed, and gain a better understanding of their needs.

ER: The community-based work is also the foundation of your ongoing project Feminist Data Set. How does this project originate and evolve?

CS: Feminist Data Set is an artistic and critical design project that tackles each step of the AI process, including data collection, data labelling, the algorithmic model, and then the design of how that model is placed in a chatbot. When I started the project in 2017, I was analyzing the political aftermath of Trump’s election, paralleled by the rise of fascist and supremacist ideas in the US. But I was also reflecting a lot on bias in machine learning and “what is machine learning’s role in sort of these spaces of analyzing content?” I arrived at the idea of focusing on a feminist AI project, with intersectional feminism as my framework to investigate data, bring it together, and examine its content. For example: take an article talking about income inequality on the basis of race and gender. You need to think: “what would be an intersectional way to clean the data? What tools am I going to use?” There are no tools out there; you need to augment them. I realized this was going to be a multi-year project in its scale, but I really liked the framing of it. It’s not only about the data and talking about it; saying how we made this also really matters.

And these same questions percolated through the project itself: the relationship I have with collaborators matters to me and, just as happens in many companies, I had a lot of discussions on corporate ethical teams. Was it equitable for me to hire people to work with on the project for data cleaning? How was I going to clean the data I collected in the workshops? How did I treat collaborators? It will be interesting to see what unfolds over the next few years in terms of feminist audits, AI, and how that will match up with the project.

ER: To conclude, do you believe in revolution? In a recent interview, philosopher Rosi Braidotti argues that “What is necessary is a radical transformation, following the bases of feminism, anti-racism and anti-fascism. An in-depth transformation around the types of subject that we are.” Do you feel your work might resonate with this idea?

CS: First of all, I would ask, “Whose revolution is it? How dedicated are they to change? And, like, what are their motivations?” Sure, we are living in Mark Zuckerberg’s dream, but what is our dream of social media and of technology? I may believe we are living in Elon Musk’s idea of revolution, but what is our idea of revolution? I mean, what is even yours versus mine? I do support the need for a kind of global revolution and agree with Braidotti’s description of an in-depth transformation of society, but it depends very much on motivations and goals.

ER: Your artistic work aims at moving individual and social consciousness, which in itself is already a revolutionary act.

CS: I think the goal, and joy, of art is that you can present new imaginaries. The Feminist Data Set project, for example, is an imaginary. One of the things I’m interested in is looking at technology with the idea of scale: when does scale break some of the best intentions we have, like open-source archives or other up-and-coming cooperative tools? I don’t think that technology should exist at the size and scale of Facebook, for instance. So, when does it start to fall apart? In this sense, Feminist Data Set is a metaphor, a small experiment, because I don’t think a company of almost three thousand people could actually engage with design justice and data feminism methodologies. Can they really be just at that size, considering they are working on software that a million people touch? Can that piece of software ever really embody those principles?